Ingesting data into BigQuery from Firestore

Link to access BigQuery: https://console.cloud.google.com/bigquery?project=biddirect-2

Enqueuing data using trigger functions

- To ingest data from a Firestore collection into BigQuery, we use Firebase trigger functions, a Cloud Tasks queue, and a service container deployed on Cloud Run. Let’s consider the accounts collection as an example.

  /accounts/ALRbwQqIVhr0wBjWu0Wp

  This Firestore path refers to a document in the accounts collection, in the form accounts -> accountId.

- A trigger function is created for each collection that needs to be migrated to BigQuery. For this example, we have the bqDataLake.accountsOnWrite function.

-

Whenever a document is created or updated in this collection, the trigger function adds the entire document data as a payload to the Firestore reference:

/cloudTasks/{year}/{month}/{documentId}in the ancillary database -

The firestore reference

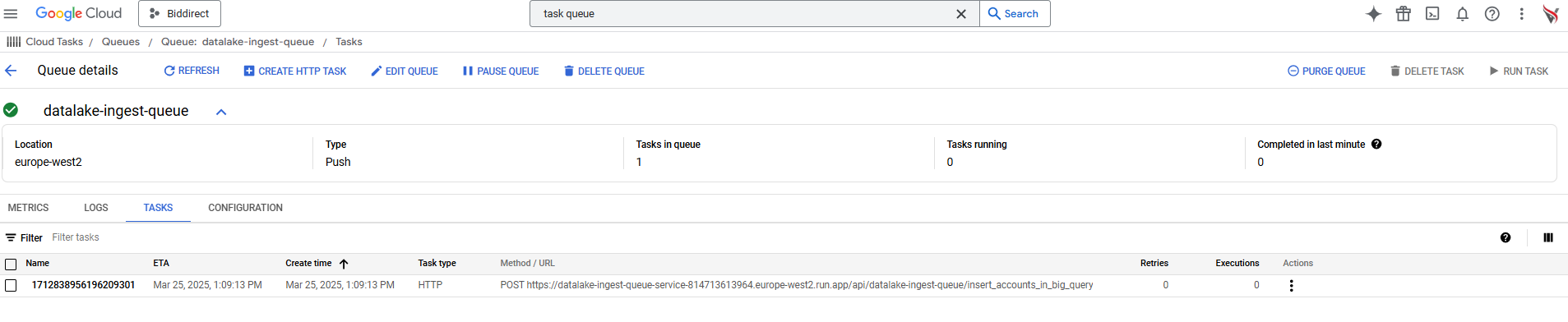

/cloudTasks/{year}/{month}/{documentId}has a trigger function which enqueues the payload to datalake-ingest-queue which is a cloud task queue.
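The staging step described above can be sketched in a few lines. This is a minimal illustration only, not the actual trigger function: the helper names, the record field names (collection, documentId, payload, enqueuedAt), the sample document data, and the zero-padded month are all assumptions, since the page does not specify them.

```python
from datetime import datetime, timezone


def cloud_task_doc_path(document_id: str, now: datetime) -> str:
    """Build the staging path /cloudTasks/{year}/{month}/{documentId}.

    Assumption: the month is zero-padded to two digits.
    """
    return f"/cloudTasks/{now.year}/{now.month:02d}/{document_id}"


def build_staging_record(collection: str, document_id: str, data: dict, now: datetime) -> dict:
    """Wrap the entire document data as the payload for the staging document.

    The field names in "body" are hypothetical.
    """
    return {
        "path": cloud_task_doc_path(document_id, now),
        "body": {
            "collection": collection,
            "documentId": document_id,
            "payload": dict(data),  # shallow copy of the full document data
            "enqueuedAt": now.isoformat(),
        },
    }


# Made-up document data, used only to exercise the helpers.
record = build_staging_record(
    "accounts",
    "ALRbwQqIVhr0wBjWu0Wp",
    {"name": "Example"},
    datetime(2025, 3, 25, tzinfo=timezone.utc),
)
print(record["path"])  # /cloudTasks/2025/03/ALRbwQqIVhr0wBjWu0Wp
```

In a real deployment, the trigger function would write record["body"] to record["path"] in the ancillary database instead of printing it.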

Processing and ingesting data into BigQuery using the datalake ingest queue service

- The tasks enqueued in datalake-ingest-queue execute a POST request to a URL pointing to the datalake-ingest-queue-service container.

- For example, when this POST request

  https://datalake-ingest-queue-service-814713613964.europe-west2.run.app/api/datalake-ingest-queue/insert_accounts_in_big_query

  is executed, it processes the payload and inserts the data into the accounts_latest and accounts_changelog tables.

- Any error that occurs during this process is captured and logged to /logs/dataLake/{year}/{month}/{date}/{collectionName}/error in the ancillary database. For this example, the errors are logged to the /logs/dataLake/{year}/{month}/{date}/accounts/error collection in the ancillary database.

- Once data ingestion is successful for a document, a log is added to the raw collection under the same path in the ancillary database:

  /logs/dataLake/{year}/{month}/{date}/{collectionName}/raw
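The processing and logging flow above can be sketched as follows. This is a hedged illustration, not the service's actual code: the function names, the insert_rows and write_log callbacks, and the log entry fields are hypothetical, and it assumes that other collections follow the same insert_{collection}_in_big_query URL pattern and {collection}_latest / {collection}_changelog table naming as the accounts example, with zero-padded month and date.

```python
from datetime import datetime, timezone
from typing import Callable

SERVICE_BASE_URL = (
    "https://datalake-ingest-queue-service-814713613964.europe-west2.run.app"
    "/api/datalake-ingest-queue"
)


def insert_url(collection: str) -> str:
    # Assumption: every collection uses the naming pattern of the accounts endpoint.
    return f"{SERVICE_BASE_URL}/insert_{collection}_in_big_query"


def log_path(collection: str, kind: str, now: datetime) -> str:
    # kind selects the "error" or "raw" log collection.
    if kind not in ("error", "raw"):
        raise ValueError(f"unknown log kind: {kind}")
    return f"/logs/dataLake/{now.year}/{now.month:02d}/{now.day:02d}/{collection}/{kind}"


def ingest(
    collection: str,
    payload: dict,
    insert_rows: Callable[[str, dict], None],  # hypothetical BigQuery insert callback
    write_log: Callable[[str, dict], None],    # hypothetical ancillary-db log callback
    now: datetime,
) -> bool:
    """Insert into both tables; log to .../error on failure, .../raw on success."""
    try:
        insert_rows(f"{collection}_latest", payload)
        insert_rows(f"{collection}_changelog", payload)
    except Exception as exc:
        write_log(log_path(collection, "error", now), {"error": str(exc), "payload": payload})
        return False
    write_log(log_path(collection, "raw", now), {"payload": payload})
    return True


# In-memory stand-ins for BigQuery and the ancillary database.
rows, logs = [], []
when = datetime(2025, 3, 25, tzinfo=timezone.utc)
ok = ingest(
    "accounts",
    {"id": "ALRbwQqIVhr0wBjWu0Wp"},
    lambda table, row: rows.append((table, row)),
    lambda path, entry: logs.append((path, entry)),
    when,
)
print(ok, logs[0][0])  # True /logs/dataLake/2025/03/25/accounts/raw
```

Passing the insert and log operations in as callbacks keeps the sketch runnable without cloud credentials; a real service would replace them with BigQuery and Firestore client calls.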

Status: Accepted

Category: Protected

Authored By: Vignesh Kanthimathinathan on Mar 25, 2025
